AI Agents Have a Compliance Problem

Agent interoperability is great unless you’re in a regulated industry. A look at why Google's A2A collides with information barriers, data residency, and compliance reality.

If you've been following the AI space lately, you've probably come across the Agent2Agent Protocol (A2A) from Google. Every article celebrating it seems to say similar things: "breaking down silos," "seamless agent collaboration," "the end of fragmented enterprise systems." And I get it: the prospect is genuinely exciting. But here's the thing that's been nagging at me as someone who builds AI systems in one of the most heavily regulated industries: What if some of those silos are supposed to be there?

Spoiler Alert: They are!

What if breaking them down doesn't just create efficiency, it creates criminal liability?

This isn't a hypothetical concern. In financial services (my industry), healthcare, and legal services, many of the "silos" that A2A dissolves exist because regulators require them. They're not inefficiencies to be optimized away. They're compliance controls. And violating them can carry criminal penalties, fines, and the kind of reputational damage that ends careers.

So today, I want to have a real conversation about what happens when the promise of agent interoperability collides with the reality of regulated industries. This isn't an anti-A2A piece. I actually think the protocol is important and well-designed for many use cases. But the "break down all silos" narrative oversimplifies a genuinely complex problem. If you work in financial services, healthcare, legal, government, or any industry with significant compliance obligations, this one's for you. Let's get into it.

First, Let's Give A2A Its Due

Before I get into the complications, I want to be clear about what A2A is and why people are excited about it. If you've been anywhere on the internet lately, you've no doubt heard about "Agents", and you know that one of the key capabilities that makes agents powerful is collaboration: multiple agents working together to accomplish goals that no single agent could achieve alone.

The problem is that until recently, there was no standard way for agents built by different vendors, on different platforms, using different frameworks, to actually talk to each other. If you built an agent using LangChain and I built one using CrewAI, getting them to collaborate required custom integration work. Multiply that across an enterprise with dozens of AI initiatives, and you've got a fragmentation nightmare. Some companies have addressed this by building their own internal, vendor-agnostic wrappers.

A2A solves this at a protocol level. Launched by Google in April 2025 and, as of June 2025, governed by the Linux Foundation with backing from more than 100 organizations including Google, AWS, Cisco, Microsoft, Salesforce, SAP, and ServiceNow, it's a standardized protocol that allows AI agents to discover each other, communicate, delegate tasks, and collaborate, regardless of who built them or what framework they use.

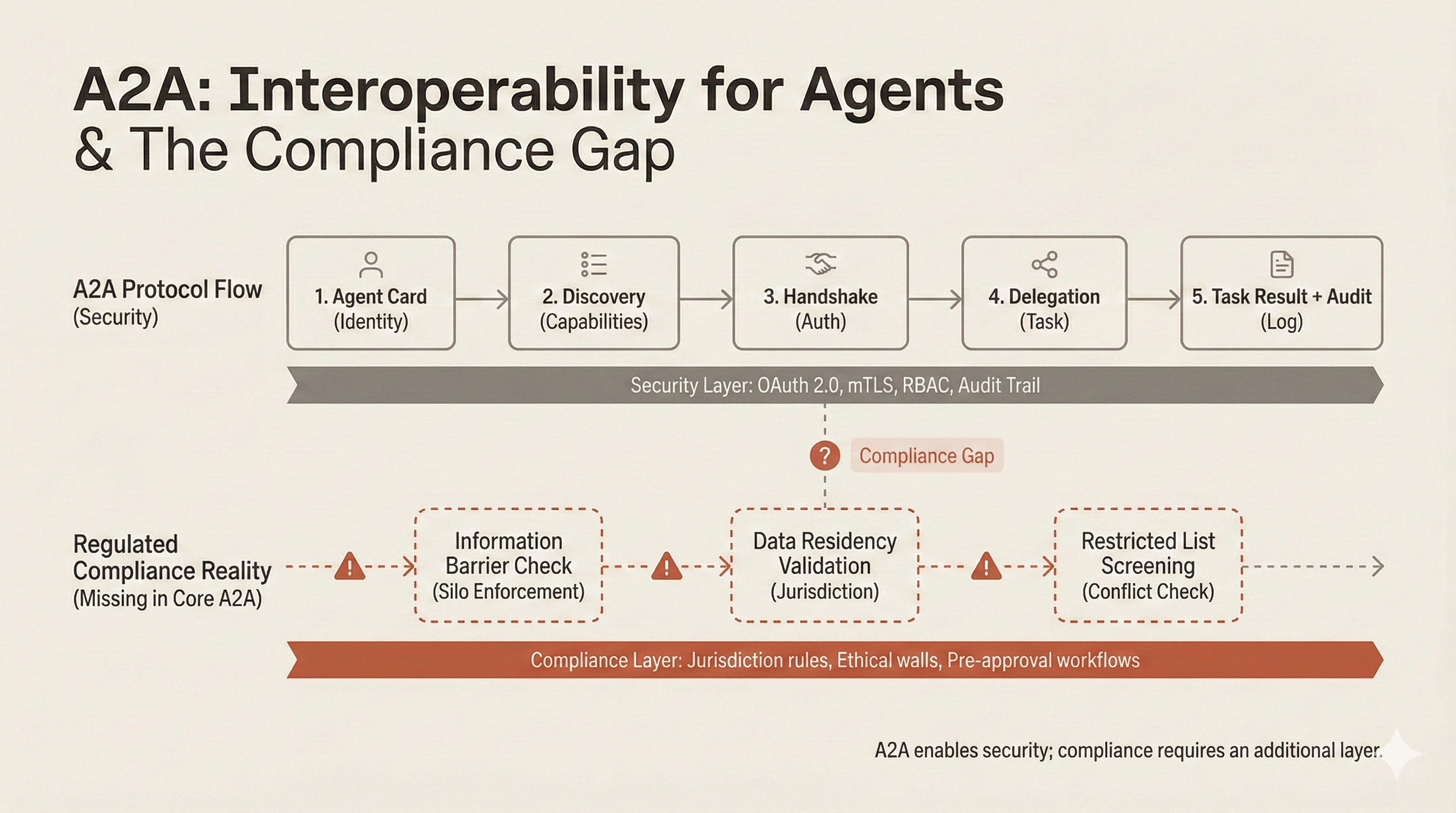

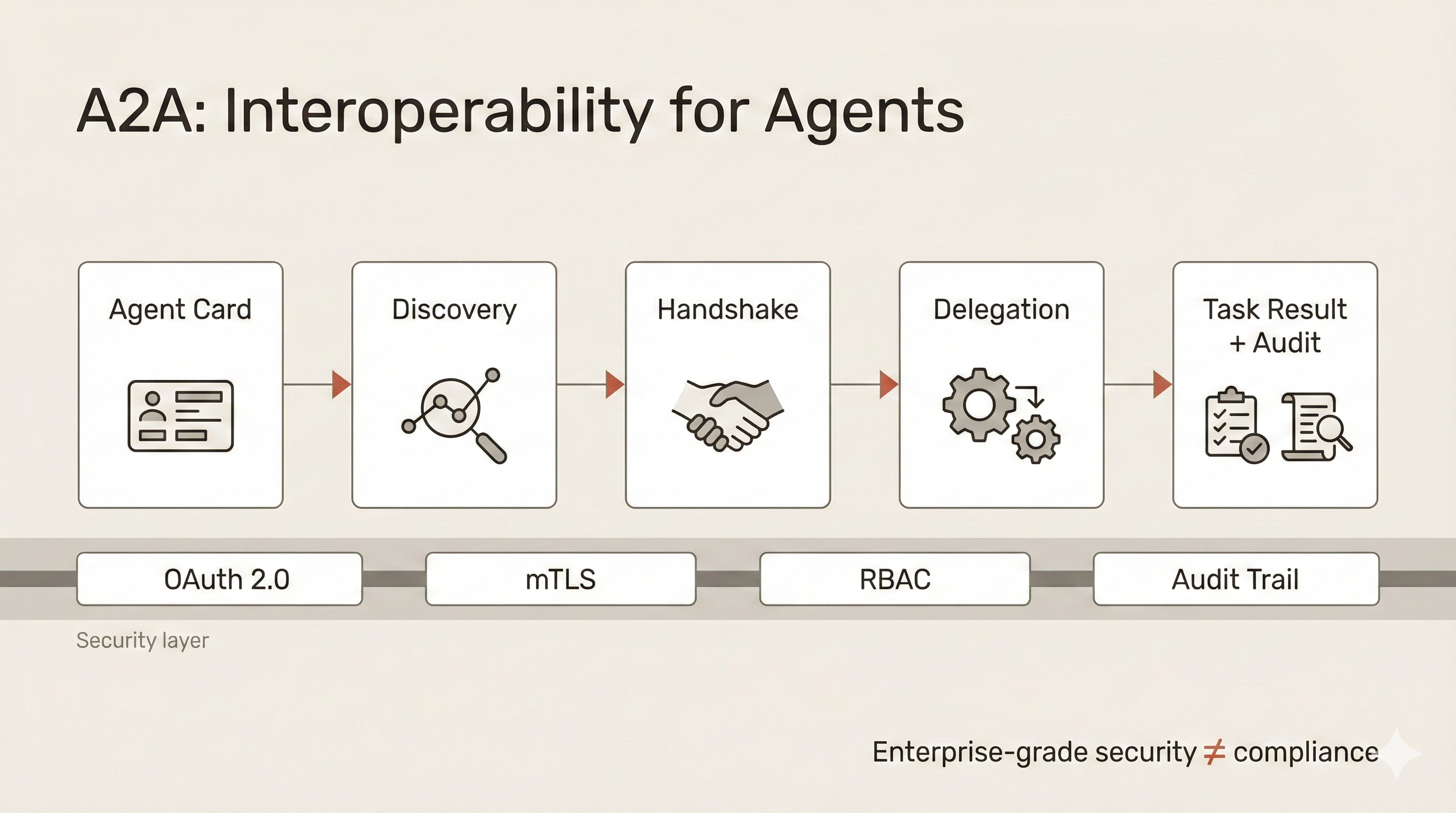

The protocol introduces some elegant concepts. Agents publish "Agent Cards" describing their capabilities, enabling automatic discovery. They communicate over HTTP and gRPC with JSON payloads, which means existing enterprise security infrastructure applies. The specification includes OAuth 2.0, mutual TLS, role-aware access control, and audit trails in the reference implementations. This is enterprise-grade stuff, built by people who understand enterprise requirements.

And here's the thing: for most enterprises, this is exactly what they need. If your agents need to collaborate within a single business unit, or across units without regulatory constraints, A2A is a significant step forward. The protocol treats agents as standard enterprise applications, leveraging established web security practices. That's smart design.

But regulated industries aren't most enterprises. And that's where it gets interesting.

The Silos Nobody Talks About (Skip if you're familiar with Information Barriers)

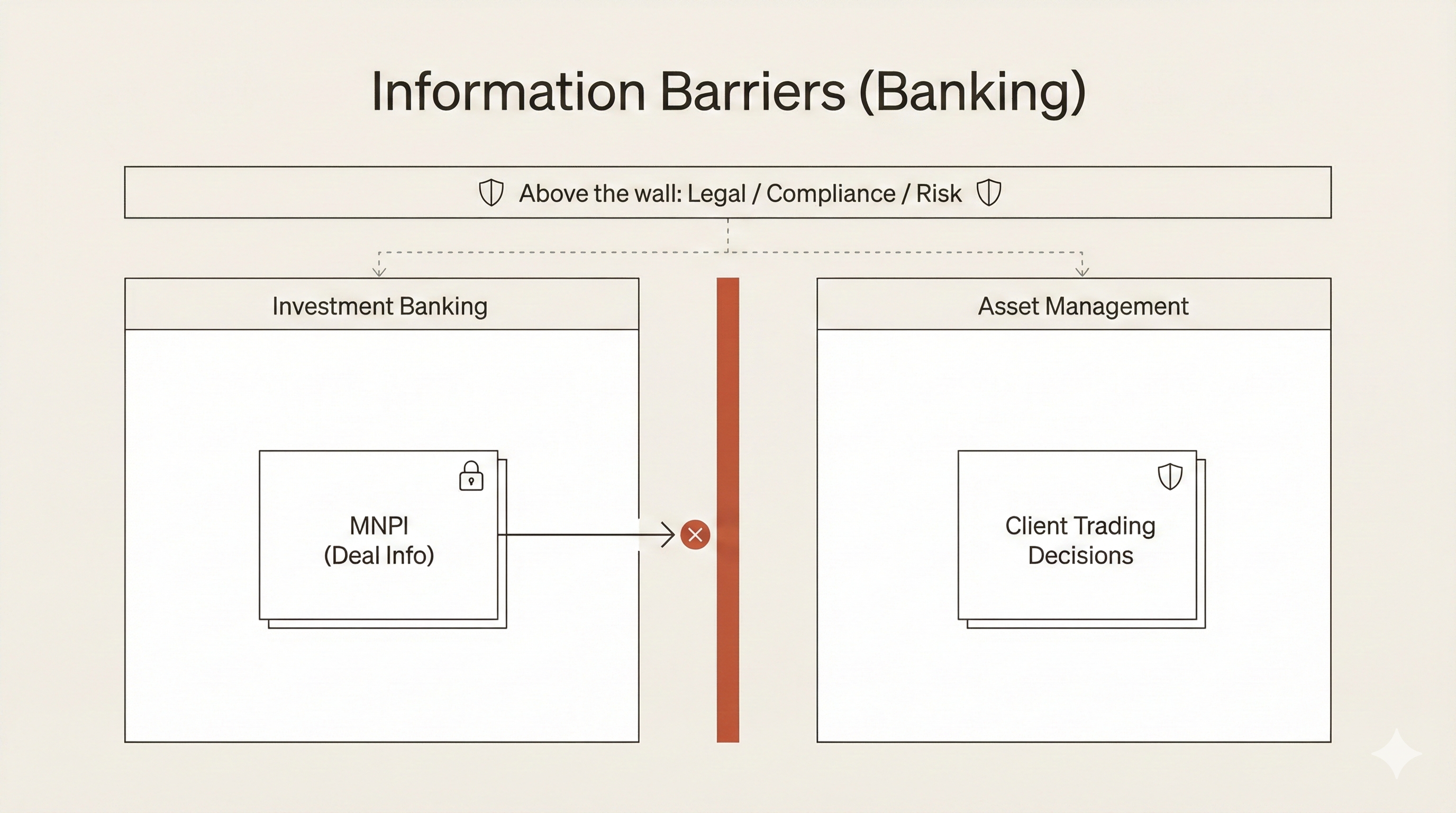

Let me introduce you to a concept that might be unfamiliar if you don't work in financial services: information barriers.

You might have heard the older term "Chinese walls." The UK's FCA retired that terminology in 2021, but the concept remains the same and I don't think these barriers are going anywhere.

Here's why they exist. Imagine a large bank with an Investment Banking division and an Asset Management division. Investment Banking is advising TechCorp on a confidential acquisition: material, non-public information (MNPI) that would move markets if it leaked. Meanwhile, Asset Management is making investment decisions on behalf of clients, including decisions about whether to buy or sell TechCorp stock.

If information flows from Investment Banking to Asset Management, even inadvertently, you've got insider trading. Not "oops, we made a compliance error" insider trading. The kind that results in criminal prosecutions, multimillion-dollar fines, and people going to prison.

This isn't theoretical. The SEC requires broker-dealers and investment advisers to maintain written policies and procedures reasonably designed to prevent the misuse of material nonpublic information (Exchange Act Section 15(g) for broker-dealers and Advisers Act Section 204A for advisers). The FCA has similar requirements and regularly warns that many firms struggle to build legally compliant information barriers. The regulatory expectation is that these walls are real, enforced, and auditable.

So how do these barriers actually work in practice? Physical separation: departments on different floors or in different buildings. System-level access controls that prevent certain employees from accessing certain databases. Communication protocols that define who can talk to whom and under what circumstances. "Restricted lists" of securities that employees can't trade. Monitoring systems (increasingly AI-powered, ironically) that scan communications for sensitive keywords and patterns.

There's also the concept of being "above the wall." Certain roles like Legal, Compliance, Risk, and Senior Management need access to both sides. These individuals face heightened scrutiny, can't advise on specific transactions, must be "particularly careful" about disclosure, and are often precluded from personal trading in affected securities.

Here's the key insight: these aren't organizational inefficiencies. They're legally required controls with criminal penalties for violation.

And it's not just information barriers. GDPR requires safeguards for transfers of personal data outside the EU/EEA, with penalties of up to €20 million or 4% of global annual turnover, whichever is higher. China's PIPL imposes localization for certain categories of data and strict security assessments for cross-border transfers. India's RBI mandates that payment system data be stored locally. The EU AI Act introduces risk-based regulation of AI systems: high-risk systems require quality management, documentation, and audit trails, and penalties reach up to 7% of global turnover for the most serious violations.

These regulations didn't emerge because regulators enjoy making life difficult. They emerged because the absence of these controls led to real harm: market manipulation, privacy violations, systemic risks. The silos exist for a reason.

Where A2A Meets Regulatory Compliance Reality

Now let's get specific about the collision points. These are questions that anyone implementing A2A in a regulated environment will have to answer.

The Discovery Problem

A2A's Agent Cards are genuinely clever. Agents publish descriptions of their capabilities, enabling automatic discovery and collaboration. But think about what this means in a bank with information barriers.

An investment banking agent advertising "M&A deal analysis for Company X" to all internal agents would violate information barrier principles. The very existence of that capability, the fact that the bank is working on a deal with Company X, is often material non-public information. In traditional systems, this is managed through compartmentalization. People on the retail banking side simply don't know what deals are happening on the investment banking side.

With A2A discovery as currently designed, agents can discover any other agent they can authenticate with. There's no concept of compliance boundaries scoping what gets discovered by whom. The questions this raises aren't trivial: How do Agent Cards interact with information barrier requirements? Should discovery be scoped by compliance boundaries? What metadata is safe to expose?
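To make "scoped discovery" concrete, here is a minimal sketch of a registry that filters Agent Cards by compliance zone before a requester ever sees them. Everything in it is an assumption for illustration: the `compliance_zone` label, the `DISCOVERY_POLICY` table, and the zone names are mine, not part of the A2A specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CardEntry:
    """An Agent Card summary plus a zone label (the label is hypothetical)."""
    name: str
    description: str
    compliance_zone: str  # assumption: not a field in the A2A spec


# Which zones each requesting zone may discover (illustrative values only).
DISCOVERY_POLICY = {
    "retail": {"retail", "asset_mgmt"},
    "asset_mgmt": {"asset_mgmt", "retail"},
    "investment_banking": {"investment_banking"},
}


def visible_cards(requester_zone: str, registry: list[CardEntry]) -> list[CardEntry]:
    """Return only the cards the requester's zone is allowed to see."""
    # Unknown zones fall back to seeing their own zone only.
    allowed = DISCOVERY_POLICY.get(requester_zone, {requester_zone})
    return [card for card in registry if card.compliance_zone in allowed]


registry = [
    CardEntry("equity-research", "Equity research summaries", "asset_mgmt"),
    CardEntry("deal-analysis", "M&A deal analysis", "investment_banking"),
    CardEntry("faq-bot", "Retail product FAQs", "retail"),
]

print([card.name for card in visible_cards("retail", registry)])
# ['equity-research', 'faq-bot']
```

The retail agent never learns that a deal-analysis agent exists, which mirrors how human compartmentalization works. Note that card descriptions themselves still need review: a description naming a client would leak even to permitted zones.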

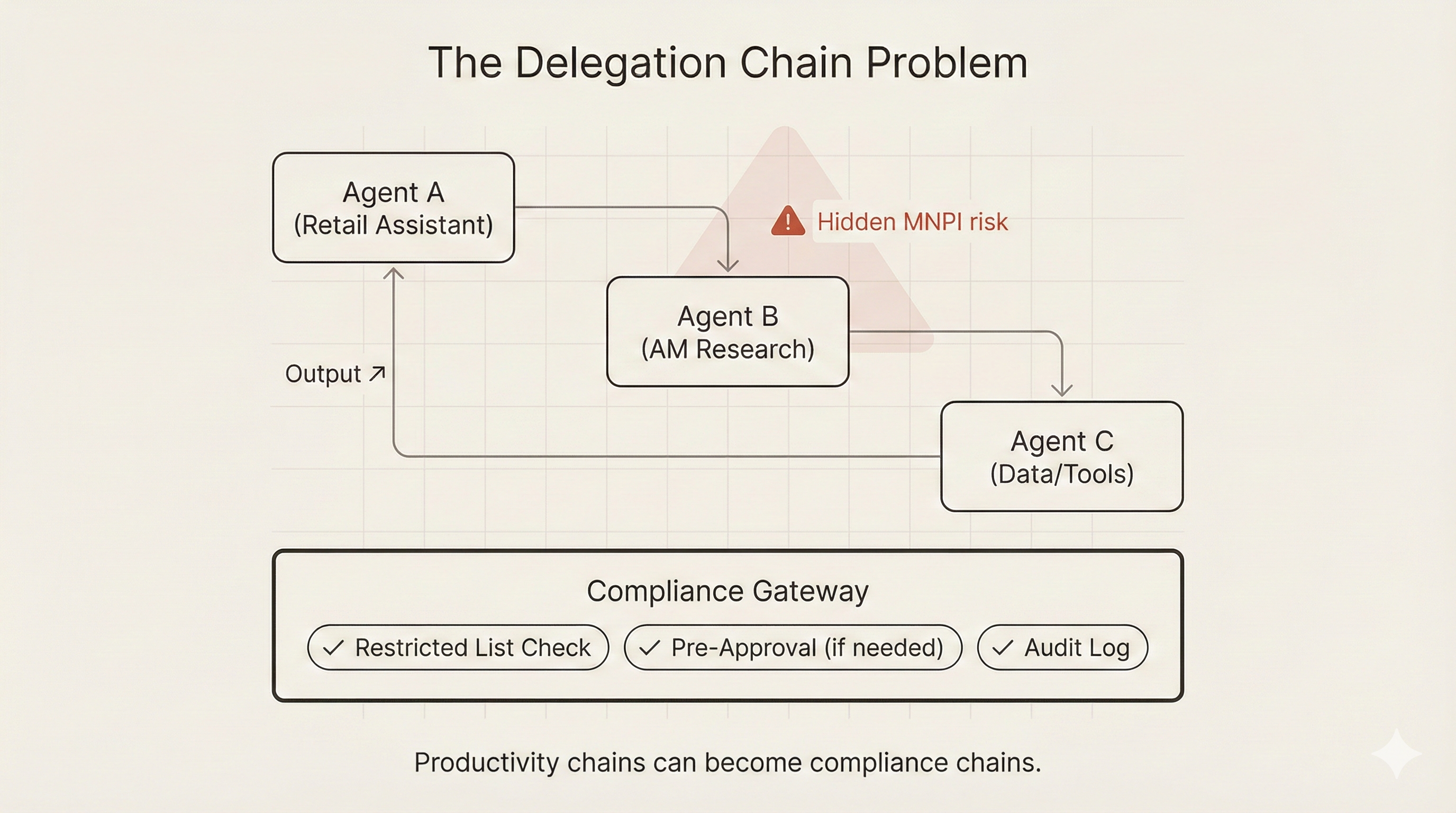

The Delegation Chain Problem

A2A enables powerful multi-agent workflows where Agent A delegates to Agent B, which delegates to Agent C. Beautiful for productivity. Bad for compliance.

Let me paint a picture. A retail banking customer asks their AI assistant: "Should I invest in TechCorp stock?" The retail agent recognizes it needs investment analysis. It discovers that an Asset Management agent has equity research capabilities. It delegates the analysis task. The Asset Management agent returns a recommendation. The customer gets personalized advice.

Sounds great, right? But what if the bank's Investment Banking division is advising TechCorp on an undisclosed M&A deal? TechCorp should be on the bank's restricted list. The recommendation from Asset Management could be influenced by non-public knowledge if barriers aren't maintained at the agent level. The delegation created a communication trail that compliance must monitor. If the Asset Management agent's training data included Investment Banking deal information, the recommendation is tainted regardless of real-time controls.

The delegation chain creates information flow that may not be visible to traditional compliance monitoring, which was designed for human-to-human communication. Who is accountable when Agent C returns material non-public information to Agent A via Agent B? Can delegation chains be pre-approved by compliance? How do you maintain audit trails that a regulator would accept?
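One way to reason about this is to treat the whole chain, not each hop, as the unit of compliance review. The sketch below records each hop and flags any chain in which both sides of a wall appear. The `Hop` record, the `BARRIERS` set, and the agent and zone names are hypothetical; A2A itself does not define them.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Hop:
    """One delegation step, timestamped for the audit trail."""
    caller: str
    callee: str
    callee_zone: str
    at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


# Pairs of zones that must never appear in the same chain (illustrative).
BARRIERS = {frozenset({"investment_banking", "asset_mgmt"})}


def barrier_violations(origin_zone: str, chain: list[Hop]) -> list[frozenset]:
    """Return every barrier whose two sides both appear somewhere in the chain."""
    zones = {origin_zone} | {hop.callee_zone for hop in chain}
    return [pair for pair in BARRIERS if pair <= zones]


chain = [
    Hop("retail-assistant", "equity-research", "asset_mgmt"),
    Hop("equity-research", "deal-analysis", "investment_banking"),
]

print(bool(barrier_violations("retail", chain)))      # True
print(bool(barrier_violations("retail", chain[:1])))  # False
```

Each individual hop here might look acceptable in isolation. Only the chain-level view shows that Asset Management and Investment Banking ended up in the same workflow.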

The Cross-Border Problem

A2A agents communicate via standard HTTP and gRPC regardless of location. That's a feature for interoperability and a nightmare for data residency compliance.

Consider: your Agent A in London delegates to Agent B in New York, which queries Agent C's data in Singapore. You've potentially triggered three regulatory frameworks in a single interaction. UK GDPR requires safeguards for the UK-to-US transfer. Singapore's PDPA has its own requirements. If personal data is involved, each jurisdiction may have different consent requirements, retention rules, and individual rights.

The A2A specification doesn't include data residency metadata. Agent Cards don't describe where data is stored or processed. The protocol operates as if the internet is borderless, which it is, but the law very much isn't.
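If residency metadata did exist, a pre-flight check could be quite simple. Here is a rough sketch, assuming each agent declares a `processing_region` (a hypothetical field) and that Legal maintains a table of region pairs with an approved transfer mechanism. The entries in `APPROVED_TRANSFERS` are placeholders, not legal advice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResidencyInfo:
    agent: str
    processing_region: str  # hypothetical metadata, e.g. "UK", "US", "SG"


# (source, destination) pairs with a confirmed transfer mechanism.
# Placeholder content: your Legal team owns the real table.
APPROVED_TRANSFERS = {("UK", "US")}


def unapproved_hops(route: list[ResidencyInfo], has_personal_data: bool) -> list[tuple[str, str]]:
    """List the cross-region hops on a route that lack an approved mechanism."""
    if not has_personal_data:
        return []
    hops = []
    for src, dst in zip(route, route[1:]):
        pair = (src.processing_region, dst.processing_region)
        if pair[0] != pair[1] and pair not in APPROVED_TRANSFERS:
            hops.append(pair)
    return hops


route = [
    ResidencyInfo("agent-a", "UK"),
    ResidencyInfo("agent-b", "US"),
    ResidencyInfo("agent-c", "SG"),
]

print(unapproved_hops(route, has_personal_data=True))  # [('US', 'SG')]
```

The sketch only looks at the outbound direction. In practice the response travels back along the same route, so a real check would evaluate both directions and the category of data involved.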

The Context Accumulation Problem

This one is subtle, but important. A2A agents maintain context and can engage in multi-turn negotiations about task requirements. That's necessary for sophisticated collaboration. But context accumulation can create unintended information sharing even when no explicit data transfer occurs.

If an agent "learns" patterns from interactions with both sides of a wall, it may inadvertently leak information through its behavior or recommendations. Imagine an agent that handles requests from both Investment Banking and Asset Management (a compliance failure in itself, but let's say it happens). Over time, it develops intuitions about which companies are "interesting." Those intuitions are encoded in its responses, potentially revealing information about undisclosed deals.

How do you ensure agents don't accumulate context across compliance boundaries? Should agents have "memory barriers" that mirror organizational information barriers? How do you audit what an agent has "learned" versus what it was explicitly told?
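For stored context at least, a "memory barrier" can be sketched as a store partitioned by zone, where recall never crosses partitions. The class and zone names below are hypothetical, and this says nothing about what a model has absorbed into its weights, which is the harder half of the problem.

```python
from collections import defaultdict


class PartitionedMemory:
    """Keeps agent context in per-zone partitions that never mix."""

    def __init__(self) -> None:
        self._store: dict[str, list[str]] = defaultdict(list)

    def remember(self, zone: str, note: str) -> None:
        self._store[zone].append(note)

    def recall(self, zone: str) -> list[str]:
        # Only the caller's own partition is returned, as a copy so that
        # callers cannot mutate stored context.
        return list(self._store.get(zone, []))


memory = PartitionedMemory()
memory.remember("investment_banking", "TechCorp deal timeline discussed")
memory.remember("asset_mgmt", "Client asked about tech sector exposure")

print(memory.recall("asset_mgmt"))
# ['Client asked about tech sector exposure']
```

Because there is no API that reads across partitions, an auditor can inspect exactly what each zone's context contained at any point in time.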

The Third-Party Problem

A2A enables enterprises to integrate best-in-class agents from external vendors. That's powerful, but the vendors behind external agents can become data processors under GDPR whenever personal data is involved. They may not honor data sovereignty requirements. Their training data provenance is unknown. Regulatory expectations for "knowing your AI" (model governance) extend to third-party systems.

What due diligence is required for external A2A agents? How do you extend information barrier policies to third parties? Can you contractually require external agents to respect your compliance controls, and more importantly, can you verify they actually do?

What the Spec Gets Right

I've spent a lot of words on problems. Let me be clear that A2A isn't a poorly designed protocol. It's actually very thoughtful about enterprise requirements. It just wasn't designed specifically for the most regulated industries, and that needs to be kept in mind when considering adopting it as a standard.

The protocol is built on familiar standards: HTTP, gRPC, and JSON payloads, plus OAuth 2.0. That means existing enterprise security infrastructure applies. Your current identity management, network security, and monitoring tools don't become obsolete.

Opacity is built into the design. Agents collaborate without exposing internal state, memory, or tools. This helps with intellectual property protection and means agents aren't forced to reveal more than necessary for task completion.

The architecture is audit-friendly. Structured tasks with clear states (such as submitted, working, and completed) are more auditable than ad-hoc integrations. You can trace what happened.

And enterprise backing matters. With major enterprises involved in specification development, enterprise concerns are heard. The protocol isn't being designed in an academic vacuum.

The specification explicitly acknowledges that "A2A treats agents as standard enterprise applications, relying on established web security practices." That's actually the right approach for most organizations.

What's Missing

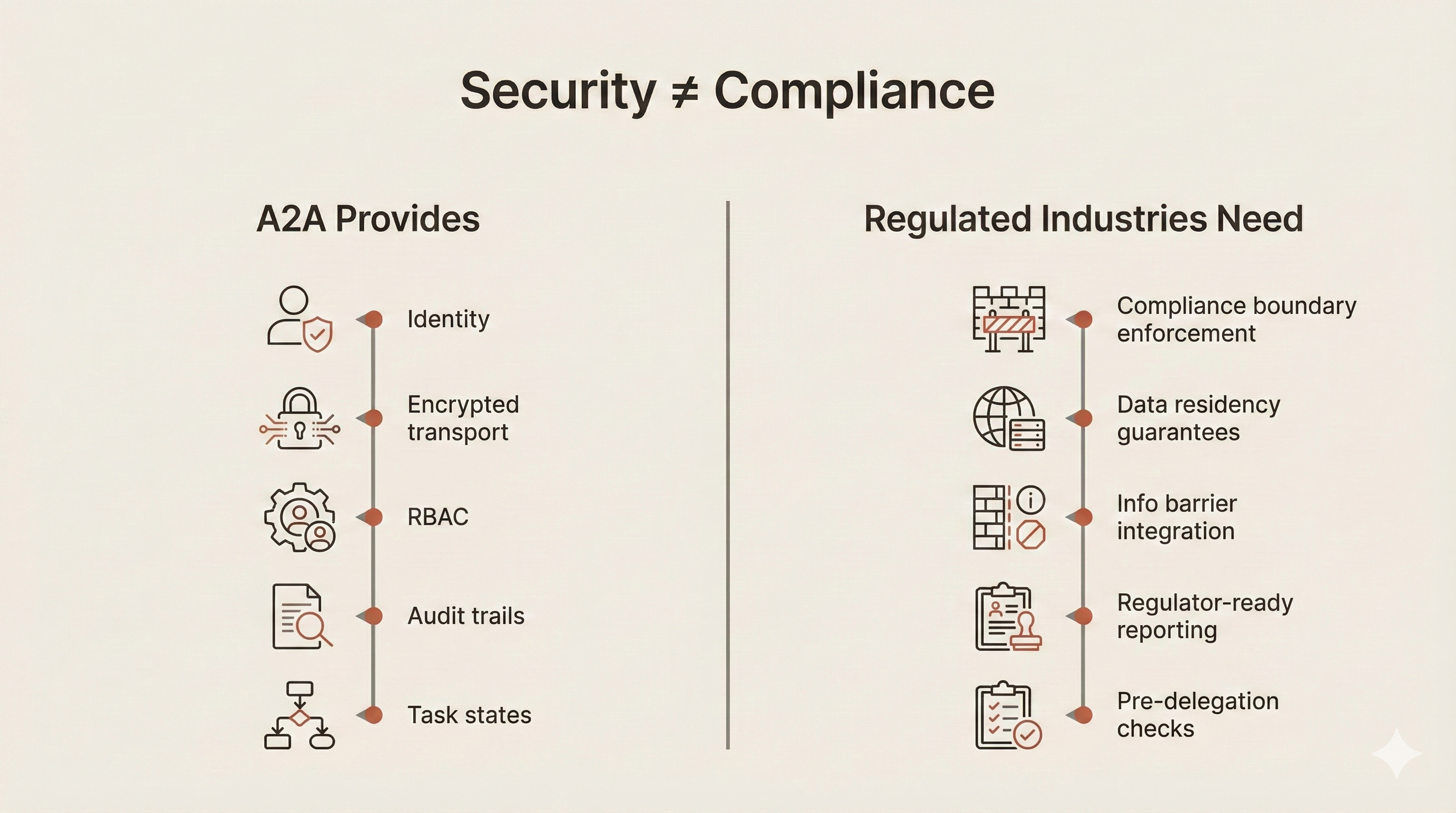

Security isn't the same as compliance. A2A provides robust security features: authentication, authorization, encrypted transport, audit trails. But regulated industries need something different:

| A2A Provides | Regulated Industries Need |
|---|---|
| Agent identity verification | Compliance boundary enforcement |
| Encrypted transport | Data residency guarantees |
| Role-based access | Information barrier integration |
| Audit trails | Regulatory reporting formats |
| Task management | Pre-delegation compliance checks |
| Capability discovery | Scoped discovery respecting walls |

The current specification has notable gaps for regulated use cases. There's no concept of compliance boundaries, so agents can discover and delegate to any agent they can authenticate with. There's no data residency metadata, meaning Agent Cards don't describe where data is stored or processed. There's no restricted list integration, no mechanism to check if a delegation would violate trading restrictions. There's no regulatory jurisdiction information, so agents don't advertise which regulatory frameworks they operate under. And there's no delegation approval workflow, meaning all delegations are automatic once authenticated.

These aren't criticisms of the protocol's design philosophy. They're observations about scope. A2A is a communication protocol. Making it also a compliance framework would probably make it too complex for general use. But for regulated industries, we need to build a compliance layer.

So What Should You Actually Do?

If you work in a regulated industry and you're evaluating A2A, here's my practical guidance. Don't avoid it. That would be throwing the baby out with the bathwater. Rather, be thoughtful about adopting it.

Map Your Compliance Boundaries First

Before you think about A2A at all, document your current state. What information barriers exist and what's their legal basis? What data residency requirements apply by jurisdiction? How do restricted list and watch list processes work? What cross-entity data sharing agreements are in place?

You can't build compliance controls for agents if you don't understand your compliance requirements for humans. Most organizations have this documented somewhere, but it's often fragmented across Legal, Compliance, and IT. Consolidate it.

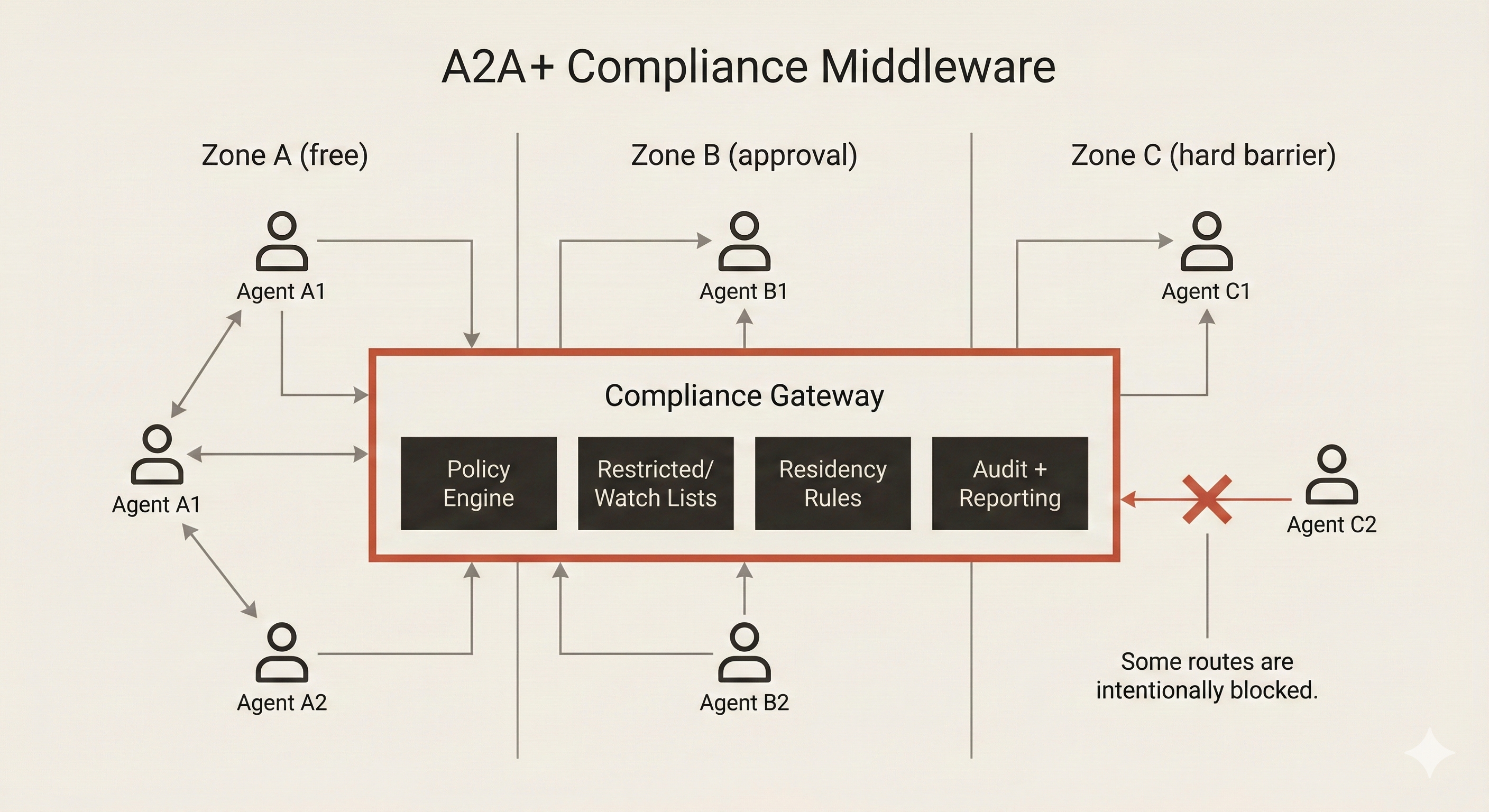

Define Agent Compliance Zones

Create a classification system for where agents can operate:

Zone A: Agents that can freely communicate with each other, with no compliance boundaries between them.

Zone B: Agents that require pre-approval for cross-zone communication. They can talk, but someone needs to okay it first.

Zone C: Agents that must never communicate with certain other zones. These are hard barriers.

Implement A2A discovery and delegation with zone awareness. This might mean running multiple A2A deployments with limited cross-deployment connectivity, or building a compliance gateway layer.
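As a starting point, the three zone types can be expressed as a small policy table with a default of "deny." This is a sketch under my own assumptions: the zone names and the `ZONE_POLICY` entries are illustrative, and your real matrix would come out of the boundary-mapping exercise.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


# Cross-zone relationships (illustrative). Anything unlisted is denied.
ZONE_POLICY = {
    frozenset({"retail", "asset_mgmt"}): Decision.NEEDS_APPROVAL,    # Zone B style
    frozenset({"investment_banking", "asset_mgmt"}): Decision.DENY,  # Zone C style
    frozenset({"investment_banking", "retail"}): Decision.DENY,      # Zone C style
}


def decide(caller_zone: str, callee_zone: str) -> Decision:
    """Classify a proposed agent-to-agent interaction by zone."""
    if caller_zone == callee_zone:
        return Decision.ALLOW  # Zone A style: free communication inside a zone
    return ZONE_POLICY.get(frozenset({caller_zone, callee_zone}), Decision.DENY)


print(decide("retail", "retail"))                  # Decision.ALLOW
print(decide("retail", "asset_mgmt"))              # Decision.NEEDS_APPROVAL
print(decide("asset_mgmt", "investment_banking"))  # Decision.DENY
```

Defaulting to deny is the important design choice here. A new zone that nobody has classified yet should be unreachable until Compliance says otherwise.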

Build Compliance Middleware

Consider a compliance gateway that sits between agents and does the regulatory heavy lifting. Before allowing a delegation, it checks restricted and watch lists. It validates data residency requirements for the data involved. It logs all cross-boundary communications in formats that satisfy regulatory reporting. It enforces approval workflows where required. It integrates with your existing compliance monitoring tools.
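Structurally, such a gateway can be a list of pluggable checks plus an audit log. The sketch below shows only a restricted-list check, with a made-up ticker (`TCORP`) and hypothetical agent names. Residency, approval, and monitoring checks would plug in the same way, and the log format is a placeholder rather than any regulator's required schema.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class DelegationRequest:
    caller: str
    callee: str
    task: str
    tickers: tuple[str, ...] = ()


# A check returns None to pass, or a human-readable reason to block.
Check = Callable[[DelegationRequest], Optional[str]]

RESTRICTED_LIST = {"TCORP"}  # made-up ticker for illustration


def restricted_list_check(req: DelegationRequest) -> Optional[str]:
    hits = sorted(set(req.tickers) & RESTRICTED_LIST)
    return f"restricted securities: {hits}" if hits else None


def gateway(req: DelegationRequest, checks: list[Check], audit_log: list[str]) -> bool:
    """Run every check, record the outcome as a JSON line, return allow or deny."""
    reasons = [r for check in checks if (r := check(req)) is not None]
    audit_log.append(json.dumps(
        {"request": asdict(req), "allowed": not reasons, "reasons": reasons}
    ))
    return not reasons


log: list[str] = []
blocked = DelegationRequest("retail-assistant", "equity-research", "Analyse TechCorp", ("TCORP",))
print(gateway(blocked, [restricted_list_check], log))  # False
```

Every decision is logged, including the allowed ones. That is deliberate: a regulator will want to see what the gateway let through, not just what it stopped.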

This is extra work, and it's not what A2A enthusiasts talk about in their blog posts. But it's what real implementation in regulated industries looks like.

Extend Your Model Governance

You probably already have governance processes for AI models. Extend them to agents. Document training data provenance: what did this agent learn from? Validate that agents don't accumulate cross-boundary context over time. Conduct regular audits of agent behavior across compliance boundaries. Establish clear accountability: who owns the agent, who approved its deployment, who's responsible when it misbehaves?
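A lightweight way to start is a governance record per agent, with a check that refuses deployment while accountability fields are blank. The field names below are my own suggestion, not a standard, and the agent and owner values are invented for the example.

```python
from dataclasses import dataclass, fields


@dataclass
class AgentGovernanceRecord:
    agent_name: str
    owner: str
    deployment_approver: str
    training_data_sources: list[str]
    last_boundary_audit: str  # ISO date of the last cross-boundary audit


def missing_fields(record: AgentGovernanceRecord) -> list[str]:
    """Names of governance fields that are still empty."""
    return [f.name for f in fields(record) if not getattr(record, f.name)]


record = AgentGovernanceRecord(
    agent_name="equity-research",
    owner="asset-mgmt-platform-team",
    deployment_approver="",
    training_data_sources=[],
    last_boundary_audit="2025-06-30",
)

print(missing_fields(record))
# ['deployment_approver', 'training_data_sources']
```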

Ask Vendors the Hard Questions

When evaluating A2A-compatible agent platforms, don't just ask about features. Ask about compliance:

- How do you handle data residency requirements?

- Can discovery be scoped by compliance boundaries?

- How do delegation chains appear in audit logs?

- Can we integrate our restricted list into delegation decisions?

- What training data provenance do you provide?

- How do you prevent context accumulation across boundaries?

- Do you support pre-delegation approval workflows?

- How would you explain an agent's actions to a regulator?

If the vendor looks at you blankly, that tells you something about their regulated industry experience.

Know What to Pilot Now vs. What to Wait On

Lower risk, pilot now:

- A2A within a single compliance zone (agents all within Retail Banking, for example)

- Non-client-facing internal automation

- Workflows that don't touch regulated data

- Agent orchestration where humans remain in the loop for sensitive decisions

Higher risk, wait for maturity:

- Cross-divisional agent collaboration across information barriers

- Client-facing autonomous agents in regulated products

- Agents handling material non-public information

- Cross-border agent delegation involving personal data

The protocol will mature. The compliance ecosystem around it will develop. You don't have to be first.

Engage Regulators Early

For highly regulated functions, consider sandbox testing with regulator awareness. Document your compliance approach before deployment. Build evidence that information barriers are maintained at the agent level. Be prepared to explain delegation chains to auditors.

Regulators aren't inherently hostile to innovation. They're hostile to surprises. Bringing them along on the journey is almost always better than asking for forgiveness later.

The Bottom Line

A2A is a genuinely important protocol that addresses real enterprise needs. The backing it has from major technology companies, its governance through the Linux Foundation, and its thoughtful design for enterprise security all suggest it has staying power. For many organizations, it will be transformative.

But "break down silos" isn't universally good advice. In regulated industries, some silos are features, not bugs. They're not organizational inefficiency to be optimized away. They're compliance controls mandated by law, enforced by regulators, and backed by criminal and financial penalties.

The current A2A specification is necessary but not sufficient for compliance in regulated industries. If you work in financial services, healthcare, legal, government, or any heavily regulated sector, you'll need to build compliance layers on top of the protocol. You'll need to advocate for compliance features in future specification versions. You'll need to accept that some A2A use cases simply won't be viable in your context. And you'll need to maintain human-in-the-loop processes for high-stakes cross-boundary decisions.

This isn't a reason to avoid A2A. It's a reason to implement it thoughtfully, with clear eyes about where the protocol's current capabilities end and where your compliance requirements begin.

The "break down all silos" narrative makes for great conference talks and compelling blog posts. But in the real world of regulated industries, the question isn't just "can our agents talk to each other?" It's "should they?" And sometimes, the answer is no.